Capacity planning is an important factor to consider when creating a cloud strategy. This course describes the key factors to consider when performing capacity planning.

First, you will learn about the technical and business factors to consider when performing capacity planning.

Next, you will learn about using standard templates, licensing considerations, user density, and system load. You will then learn how to use captured metrics to perform trend analysis and how to do performance capacity planning.

Finally, you’ll learn how to create a cloud-based database and how cloud features can be scaled to meet capacity demands. This course is one of a collection of courses that prepares learners for the CompTIA Cloud+ (CV0-003) certification.

Table of Contents

- Course Overview

- Technical Capacity Planning

- Business Capacity Planning

- Standard Templates as a Factor in Capacity Planning

- Licensing as a Factor in Capacity Planning

- User Density as a Factor in Capacity Planning

- System Load as a Factor in Capacity Planning

- Trend Analysis as a Factor in Capacity Planning

- Performance Capacity Planning

- Creating Databases in the Cloud

- Scaling Resources

- Course Summary

Course Overview

CompTIA Cloud+ certification is targeted at IT infrastructure specialists who want to develop multi-vendor skills in the areas of cloud computing, including the movement of applications, databases, workflows, and systems to the cloud. This certification addresses system architecture, security, deployment, operations, and automation, and aims to prepare system administrators to perform the tasks required of their job, not just to rely on a narrow set of vendor-specific product features and functions.

Capacity planning is an important factor to consider when formulating a cloud strategy. This course will describe the important factors to consider when performing capacity planning.

First, I’ll discuss the technical and business factors to consider. Next, I’ll cover using standard templates, licensing considerations, user density, and system load.

Finally, I’ll walk through using captured metrics to perform trend analysis and how to do performance capacity planning.

Technical Capacity Planning

In this exercise, we’ll introduce some of the factors to consider when planning for capacity in a cloud environment, beginning with the technical and business aspects. Now, straightaway, there are two primary considerations that can make capacity planning much easier when operating in the cloud.

The first is the availability of resources. By that, I mean that with most major providers, from the perspective of any given tenant, your resource capabilities are virtually unlimited. Now, clearly, that will have an effect on the price.

But it removes many of the limitations faced by an on-premises infrastructure, where inherent restrictions might force you to take an approach along the lines of, well, let’s do the best we can with whatever we have.

Secondly, you have as much ability to scale back as you do to scale up. In other words, since there are no infrastructure purchases to implement, if you feel like you went a little overboard with resource allocation, then just deallocate those resources. In almost all situations with cloud services, you only pay for what you use. So, if you don’t need the resources anymore, simply deprovision them. Nothing needs to be sold. No leases need to be terminated. No contracts have to be renegotiated. You simply deprovision the resources. So, clearly, this provides you with much more flexibility.

You should still try to make accurate estimates when planning for capacity, of course, in terms of the technical requirements. But the kind of flexibility offered by cloud services makes this much easier on the business side of operations. So then, let’s look at some specific considerations on the technical side of things. Say you’re looking to implement something like a new web-based ordering service for your customers.

Then, of course, the obvious requirements might include the necessary virtual servers, such as web servers and/or database servers, and all of the associated resources of those servers, such as processing, memory, bandwidth, and access to storage. And again, internally, those who pay the bills are going to want to pay as little as possible, while those who develop and administer are going to want as much as possible, within reason, of course.

So, you need to identify the balance between having enough resources available so that your solution isn’t under-provisioned, while ensuring that you don’t go too far and end up wasting resources. In particular, you need to determine factors such as the number of servers, which needs to be sufficient for the load. But you may not know what the load is going to be, so there can often be a lot of guesswork in that regard.

Plus, of course, you always need to consider your Backup and general security requirements, as well, which also may not be known until the system is operational and you have a better understanding of what the workload will be. So, you still may not be able to eliminate a certain level of guesswork when it comes to implementing a new solution.

But, as mentioned, given the flexibility of resource allocation in the cloud, even if your estimates are way off in either direction, you can add more resources at any time with no upfront costs, and deprovision at any time with no considerations other than knowing that your bill next month will be lower.

Still, planning your technical capacity should include as much information as possible using best guesses for applications and services that are new. But you may also be able to gain insight from previous or existing implementations that you do have in your on-premises environment, already.

For example, if it’s a matter of migrating an existing application or service to the cloud, as opposed to building a new one, then you can use the existing one as a template to provide more insight into what will be needed when it is migrated. This will make it much easier to identify the requirements and allocate resources as appropriately as possible to support the workload, while still providing you with the ability to make changes much more easily, should the need arise.

But, again, if it’s a new application or service, there will still have to be some level of a best-guess approach, although inaccurate guesses are far less of an issue in a cloud-based implementation. So then, with respect to managing whatever capacity is going to be implemented, whether it’s based on the best-guess approach of a new service or the historical performance data of a service being migrated, a baseline value of capacity should always be determined. As best as possible, it should reflect what you consider to be normal in terms of the level of activity, because this gives you a starting point. By knowing what is normal, you can assess what needs to be done in terms of scalability if and when things start to deviate from the baseline. And, again, that can be in either direction.

Perhaps activity is far higher than what you anticipated, so, you need to allocate more resources. Conversely, perhaps it’s far lower and some resources can be deprovisioned. From the perspective of just the application or the service, itself, it’s a win either way. It’s either going to perform better, which will result in a more satisfactory experience for the users, or it’s going to cost you less going forward. That said, many applications and services offer the ability to auto scale on demand.

So, based on the activity level, resources can be added or taken away as needed, which lowers the level of administrative overhead and better strikes that balance between performance and cost, because you still don’t pay for resources when they’re not in use. Furthermore, any cloud solution offers the ability to monitor and gather metrics, so you can regularly assess its capacity and make adjustments, accordingly.
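To make the baseline idea a little more concrete, here is a minimal sketch in Python of how a deviation from the baseline could be turned into a scaling decision. The function name, the 25 percent tolerance, and the sample numbers are all assumptions chosen for illustration. They do not come from any particular provider, and a real autoscaling service would apply its own rules and metrics.

```python
def scaling_decision(baseline: float, observed: float, tolerance: float = 0.25) -> str:
    """Suggest a scaling direction by comparing observed activity to the baseline.

    baseline  : the level of activity considered normal (hypothetical units)
    observed  : the level of activity currently measured, in the same units
    tolerance : assumed fraction of deviation that is still treated as normal
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    deviation = (observed - baseline) / baseline
    if deviation > tolerance:
        return "scale up"      # activity is well above normal
    if deviation < -tolerance:
        return "scale down"    # activity is well below normal
    return "hold"              # within the tolerated band around the baseline


# Hypothetical readings against a baseline of 200 requests per minute.
for reading in (320, 90, 210):
    print(reading, "->", scaling_decision(baseline=200, observed=reading))
```

The tolerance band is there so that small, ordinary fluctuations around the baseline do not trigger constant changes in either direction.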

In terms of the physical resources themselves that need to be considered, they aren’t really any different than those you would have in an on-premises implementation. So, factors such as the hardware specifications of your servers, including processing, storage, memory, and many other characteristics, still need to be assessed. And to be clear, of course, you aren’t ever assembling a physical server in the cloud. They are always virtual machines.

But you can still choose the level of performance for each of these characteristics when configuring those virtual machines. Clearly, the cost goes up as you increase these values. But, again, you can always scale back at any time. The key is to try your best to determine appropriate starting or baseline values, then adjust accordingly. And because everything is virtualized, you can be much more specific in terms of resource allocation.

You can also take an approach of partial allocation, if you will, whereby certain performance characteristics get attention in the immediate term and others are dealt with later on. For example, you may know that your service will require a lot of processing, but you might not have a good idea as to the storage requirements. Conversely, if it’s more of an archiving solution, then you might already know that the storage requirements will be very high, but not that much processing will be required.

It all depends on the nature of the service, of course. But with cloud services, you have much more control over the amount of resources that are allocated and you don’t have to determine everything at the same time. In an on-premises environment, provisioning more resources might require the acquisition of additional physical infrastructure, which could take days, weeks, or even months. In the cloud, it takes minutes.

All of that said, you should still try your best to determine, as accurately as possible, what kind of capacity is going to be required before moving to the cloud for any kind of a solution. Because if you’re just guessing blindly, it will still require a lot of overhead and management before you settle into the correct configuration.

Business Capacity Planning

In this exercise, we’ll examine capacity planning more from the business perspective, which, of course, will always relate to technical capacity planning, because whatever the technical requirements are for any given solution, they will ultimately affect your business operations and, of course, your bottom line.

But technical requirements only refer to what is needed to ensure that a service runs appropriately, whereas business capacity planning refers to the maximum amount of work that your business is capable of completing within a given timespan. For example, you may have perfectly determined all of the technical requirements and planned everything out to the letter.

But the solution still has to be implemented, which now, of course, requires human resources as opposed to technical resources. And this needs to be considered in more than just the immediate term because of course, development and implementation of a solution are only the initial phases. So, it’s not just a matter of identifying the human resources to bring it to production. You also need to identify what will be required to maintain it as it changes and evolves over time.

Again, technical resources will always be a part of the equation. But you also need to try to assess what may happen in the longer term. Suppose, for example, that this is your first implementation of a cloud service and everything goes very well.

Will that indicate that more and more services will be migrated to the cloud, or will it just be for this solution? And what will that mean for your business? Will the ease of implementation facilitate new services that will require additional staff? Or will the lack of infrastructure result in fewer staff since there is less to maintain? Again, it’s all part of the capacity equation.

So, when attempting to assess the business capacity of moving to the cloud, it can be useful to examine some key factors of your existing on-premises environment first, if applicable, of course, including your own capacity. Are you already approaching the limits of your infrastructure or are there resources to spare? With respect to what you do have in place, what are the usage statistics?

And how do they change over time? In other words, are there noticeable and predictable times where usage is low versus high? And how do you anticipate that will change? Are your operations tending toward expansion or are you looking to downsize? And, of course, stakeholders are going to want to know what the return on investment will be if a move to the cloud is being considered, particularly if large investments in your current infrastructure were recently made.

Based on your assessment of your existing capacity, you can then compare that against several aspects of cloud services, including service level agreements, whereby a certain level of performance must be maintained by the provider. That, of course, may not have always been possible in your internal environment.

Then, in terms of what should or should not be placed in the cloud, your own utilization characteristics, along with any workload analytics, may help you to determine which services should be moved to the cloud first, based on how well they were utilized and/or why any of those usage characteristics might have changed.

Plus, the requirements of any given application can also help you to determine what should be placed where. For example, if better or easier accessibility has been identified as a significant concern, cloud-based applications are accessible from any location on virtually any device that has access to the internet, without the need for a VPN or other remote solution. If, however, security has been identified as the primary concern, then perhaps this might suggest that an application should remain within your internal environment.

You’ll also still need to consider resource allocation. Again, not having enough resources in the cloud is rarely a problem. But you still need to ensure that the resources can be allocated in a manner that aligns with your business processes. For example, who makes the determination as to when a resource allocation needs to be changed? And what are the thresholds at which it has to be changed? What kind of approval process might be required, and how often are the needs assessed?

Data management policies can be a significant consideration as to what kind of capacity your cloud implementation may need, because once data of any description is stored in the cloud, it is no longer under your physical control. Now, this doesn’t mean that it isn’t secure, but this can still present a significant hurdle for many organizations.

Disaster recovery still needs to be considered as well, because every application or service that is migrated to the cloud will need some kind of recovery strategy along with it. That will almost certainly increase your capacity requirements, as will any anticipated growth, particularly if the use of cloud services is what is enabling that growth to occur in the first place.

Whether you are planning for capacity from a technical perspective or a business perspective, each will affect the other. So, it’s important to coordinate your efforts so that both perspectives are considered before any implementation is performed.

Standard Templates as a Factor in Capacity Planning

In this exercise, we’ll examine the use of capacity planning templates to help determine the needs of your organization when considering a move toward cloud services. Now, any given template might vary with respect to its format. But in general, you can expect to find three primary sections: an executive summary, a strategy for adoption, and a plan for implementation. We’ll take a look at each one in just a moment.

But some of the key considerations when working with a template and determining capacity requirements should include the business drivers. In other words, what needs have been identified that cannot be met with your current infrastructure?

They should also include the key performance indicators, or KPIs, of your business that suggest that cloud services might provide the solution to those needs, and an outline of what the capabilities of the desired services are, or perhaps how the capabilities of a cloud implementation can improve your business operations as compared to your existing services and infrastructure.

So, let’s come back to each section of the template. The executive summary should typically list the business priorities that represent the challenges that need to be addressed, the timelines for adopting new services to address those challenges, any milestones that will be achieved, and a breakdown of any individual projects that will need to be undertaken, along with any criteria for those projects, such as the sequence and the resources required to complete them. In general, the executive summary should address the question, why do we need this and how will we get there? That, of course, will always be a concern to the stakeholders.

The strategy should typically be presented from the perspective of here’s where we are and here’s where we need to be, for lack of a better description. It should include components such as the motivation and drivers behind the decision to move toward a cloud implementation, and the expected business outcomes and the justification behind those outcomes. In other words, you generally shouldn’t just state something like it’ll cost less without saying here’s why it’ll cost less. Perhaps outline the first adoption or a pilot project and identify the key stakeholders at that stage. For example, the initial project might only affect a certain division of the organization, so identify who is going to be affected.

The plan sounds somewhat similar to a strategy, but a strategy is more of a general approach that states we need to do this. The plan then addresses the question, okay, how do we do that? So, it should outline your current technology assets to identify where adopting cloud services can address any shortcomings, the readiness of your personnel and their ability to work in the cloud, how cloud services can align with your organizational and/or operational requirements, and more of a step-by-step adoption plan that indicates how the move toward cloud services will actually proceed.

Now, whether this level of detail is necessary will, of course, depend on the nature of your organization. But using capacity planning and standard templates can help to organize all aspects of a migration toward using cloud services, particularly for a large organization that has already made significant investments in infrastructure and has been operating for a long time without using cloud services.

Clearly the stakeholders will want to see clear justification for making such a move. So, careful planning and the use of templates will help to make your case much more effectively.

Licensing as a Factor in Capacity Planning

In this exercise, we’ll examine several licensing models that you might encounter in a cloud environment, which can also help you to determine your capacity needs as you consider a move toward cloud services, or as you consider new services after you’ve already begun using the cloud. Now, the license models that are available are going to depend on the provider, of course, as well as the type of resource, service, or application that you need. But becoming familiar with most of these options should help you to determine the appropriate licensing structure for any situation.

So, the first type of licensing is simply a subscription-based license, which can usually be paid monthly or annually, so it gives you some flexibility in that regard. A monthly payment might be more flexible because you can simply cancel your subscription at any time, but many providers offer discounted rates if you commit to an annual subscription. But, of course, that’s your call.

Now, in most cases, the subscription itself does not cost anything. In other words, you can go and sign up for a new cloud service right now and not have to pay a cent. But, of course, as soon as you create any kind of resource within that subscription, then you start incurring charges. But again, there are usually no costs associated with simply signing up. You just agree to the type of payment structure you’ll make and how that payment will be made, such as monthly by using a credit card.

So, then having created your subscription, the most common type of license from that point is pay as you go, which is exactly as it sounds in that you only pay for what you use. So again, if you simply set up your subscription, but then don’t do anything for let’s say a month, there won’t be any charges for the first month. But as soon as you create a resource, it will start incurring charges immediately. But even with that specific resource, it will still only charge you based on how much you’re actually using it.

Some examples might include a virtual machine that might charge a rate per hour based on the number of processors that you configure or disk usage. Or if it’s a database server, it would charge maybe based on the overall size of the databases or by how many queries it processes. Now, you typically won’t see this level of detail in your bill each month.

For instance, you likely would not see a breakdown of activity across each processor of a virtual machine or how many queries were actually processed by the database server. But it would give you a breakdown of how long the resource was in use for the billing period and a general idea as to how busy it was. For example, something like a virtual machine might list that it was active for 700 hours during the billing period at an overall resource usage level of x dollars per hour.

But if there were periods during which the virtual machine was not running, you would not see charges at all for those periods. Which can certainly be helpful in the early stages of implementation when you’re just doing initial setup and testing and configuration.

As long as no one is using a resource like a virtual machine, you can shut it down and it will not incur any charges, so that will help to save some money.
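As a rough illustration of the pay-as-you-go idea, the following Python sketch computes a compute charge from the hours a virtual machine was actually running. The hourly rate, the length of the billing period, and the function name are hypothetical values picked for the example. Storage and any other charges that a provider might bill separately are deliberately left out.

```python
def compute_charge(hours_running: float, rate_per_hour: float) -> float:
    """Return the charge for the hours a resource was actually running."""
    if hours_running < 0 or rate_per_hour < 0:
        raise ValueError("hours and rate must be non-negative")
    return round(hours_running * rate_per_hour, 2)


HOURS_IN_PERIOD = 730   # assumed length of the billing period, in hours
HOURS_STOPPED = 30      # assumed time the virtual machine was shut down
RATE = 0.10             # hypothetical price in dollars per hour

always_on = compute_charge(HOURS_IN_PERIOD, RATE)
with_shutdowns = compute_charge(HOURS_IN_PERIOD - HOURS_STOPPED, RATE)

print(f"always on:      ${always_on:.2f}")
print(f"with shutdowns: ${with_shutdowns:.2f}")
```

With these assumed numbers the machine is billed for 700 hours rather than 730, which is the same kind of line item described in the billing example above.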

A more specific implementation of pay-as-you-go licensing that you might encounter is pay-per-instance licensing, which can be common in infrastructure as a service and platform as a service models, whereby you don’t necessarily pay by specific resource usage such as processors, but rather by each instance of a server that the provider provisions for you.

You still only pay for the instances you use. But as an example, if a developer is building a custom application for something like a web-based ordering system, they can define the requirements of the underlying servers that are needed in the specifications of the development project. The provider can then automatically spin up the necessary virtual machines as per the developer’s specifications. But that configuration might not be static.

In other words, as demand increases on the service, new servers can be provisioned automatically and deprovisioned when they’re no longer needed.

But neither the developer nor an administrator has to manage those servers in any way. The developer is only interested in subscribing to the services that the server can provide such as the web services, but the server itself is managed entirely by the provider.

So again, it’s not something like a virtual machine that you log into using something like Remote Desktop Services to configure it. The provider literally creates the server and you simply subscribe to its services.

But in terms of billing, of course, your costs each month will still be based on how many instances were required for that total billing period and for how long any given instance was in use.

Per-user licensing is based on the number of user accounts that you create in a directory service in the cloud, typically for when those users need access to applications.

Each user would be represented by a unique ID so that usage of various services can be tracked and monitored for billing purposes. But once they have their account, they can start accessing multiple instances of various applications and services across multiple devices if applicable. And if desired, you can also define a specific license period, which can be useful for term contracts or short term projects. Or you might have an open-ended license that is simply used until it’s no longer needed.

A common example of user licensing is Microsoft’s Office 365, which can provide users with cloud-based services such as email, collaboration and sharing services, video conferencing and calling applications, and many others. Each user requires an account in Microsoft’s Azure Active Directory, which represents the user ID. From that point, you then assign an Office 365 license to any user who needs one.

And again, you only pay for the licenses that are issued. Simply having the user account doesn’t incur any charges at all.

A similar approach is per-device licensing, which might be a little more applicable if there are users that share devices, such as in a shift work environment.

If, for example, three users all use the same tablet across shifts in a 24-hour period, then you can apply the license to the device rather than to each user. This requires the device to also register with the directory service, which assigns it a device ID instead of a user ID. But from that point, the device can in fact be used by an unlimited number of users, as the usage is tracked by the device, not by the user.
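The trade-off between per-user and per-device licensing comes down to simple arithmetic, which can be sketched in Python as follows. The prices and the function name are hypothetical, and real license terms may include conditions that this comparison ignores.

```python
def cheaper_model(users: int, devices: int,
                  price_per_user: float, price_per_device: float) -> str:
    """Compare total cost under per-user and per-device licensing.

    Returns "per_device" only when it is strictly cheaper; ties go to "per_user".
    """
    if users < 0 or devices < 0:
        raise ValueError("counts must be non-negative")
    per_user_total = users * price_per_user
    per_device_total = devices * price_per_device
    return "per_device" if per_device_total < per_user_total else "per_user"


# Three shift workers sharing one tablet (hypothetical prices per month).
print(cheaper_model(users=3, devices=1, price_per_user=10.0, price_per_device=15.0))

# Three workers who each have their own device.
print(cheaper_model(users=3, devices=3, price_per_user=10.0, price_per_device=15.0))
```

In the shared-tablet case one device license is compared against three user licenses, so the per-device model comes out ahead even though a single device license is assumed to cost more than a single user license.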

Now, you may also encounter more specific licensing scenarios that will depend on exactly which type of resource is being configured. But an example might include configuring a very specialized virtual machine which might require a separate licensing model due to its specificity.

But in that event, you might find the licensing based on the processor configuration. And there are two basic approaches, per socket and per core. And we’ll come to the per core model in just a moment. But one quick note, of course, this is still a virtual machine. So, you aren’t dealing with physical processors, sockets and cores. But in the configuration of that system, you can choose what kind of physical configuration to emulate.

It’s then up to the provider to ensure that your processor configuration is met. But from your perspective, you can think of it as just submitting a work order to assemble a physical server as per your specifications.

So, the per-socket model is based on the actual socketed processors on the motherboard and processing cores are not counted in this model, nor are sockets that aren’t used.

For example, a system might have two physical sockets but only one actual CPU, in which case only one license would be issued. But as you might imagine, the per core license is based entirely on the number of processing cores. And one license is required for each core regardless of the sockets.

Now again, it’s just an emulated configuration of a physical server. But in any virtual machine, you can specify the number of virtual cores that you want, which again simply determines the number of licenses required.
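The difference between the two processor-based models can be shown with a small Python sketch. The class and function names are made up for this example, and the counting rules are only the ones described above: populated sockets for the per-socket model, and cores for the per-core model. Any minimums or bundles that a specific vendor applies are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessorConfig:
    """Emulated processor layout of a server (hypothetical structure)."""
    sockets_total: int       # sockets on the emulated motherboard
    sockets_populated: int   # sockets that actually hold a CPU
    cores_per_cpu: int       # processing cores in each CPU

    def __post_init__(self) -> None:
        if not 0 <= self.sockets_populated <= self.sockets_total:
            raise ValueError("populated sockets must be between 0 and total sockets")
        if self.cores_per_cpu < 1:
            raise ValueError("each CPU needs at least one core")


def per_socket_licenses(cfg: ProcessorConfig) -> int:
    """One license per populated socket; cores and empty sockets are ignored."""
    return cfg.sockets_populated


def per_core_licenses(cfg: ProcessorConfig) -> int:
    """One license per core, regardless of how the cores are spread over sockets."""
    return cfg.sockets_populated * cfg.cores_per_cpu


# Two sockets, one CPU installed, eight cores on that CPU.
server = ProcessorConfig(sockets_total=2, sockets_populated=1, cores_per_cpu=8)
print("per socket:", per_socket_licenses(server))
print("per core:  ", per_core_licenses(server))
```

For the same emulated server, the per-socket model needs one license while the per-core model needs eight, which is why matching the processor configuration matters when comparing the two.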

Now again, I do want to stress that both per socket and per core licensing will likely only be encountered for very specific server configurations. And in fact, you still might not see it at all with some providers.

They will certainly give you many options as to the configuration of your servers, but they’ll likely just charge out more per hour as the resources required for that system increase.

But it is something that you might encounter. And just to finish up, I should also mention that in many cases, you can configure servers that already have any desired software on them. For example, in Microsoft’s Azure environment, you can create virtual machines that are already running the desired operating system and something like SQL Server for hosting databases.

In that case, you will still pay more for the software license for SQL Server, but you don’t have to worry about actually purchasing the license itself. It’s simply worked into the price each month. But if you already have a license for your on-premises environment, which is likely already a per-socket or per-core license, then you can use that for the virtual machine that you’re setting up and simply match the processor configuration. Then you won’t have to pay for the software license. It’s already your license.

The configuration wizard simply presents you with an option to supply your license key, which will avoid any additional charges. So again, the licensing situation that you encounter is certainly going to vary.

But in many cases, it’s very easy to work with because you simply specify I want this service or I want this application. And the licensing is all worked into the price.

User Density as a Factor in Capacity Planning

In this exercise, we’ll examine the effect of User Density on your Cloud Services, which needs to be considered with respect to what kind of infrastructure requirements will need to be in place to support the service you want to be able to offer your employees, your customers or both.

So, in this context, user density refers to the number of concurrent user sessions that you anticipate for any given service, which of course affects the size of the resources needed to support that level of service.

Now, this is applicable for a wide range of services, of course. But if you consider something like a web-based ordering system for a large online retailer, you might expect to have millions of orders per day, maybe thousands at any one time.

If however you contrast that with an ordering service for a local supplier, who might only service a limited region, then you might only expect hundreds of orders per day with maybe only a few concurrent sessions. So, even though it’s the same type of service, clearly, the size of the virtual machines supporting this service will have to be appropriate to the workload due to the user density.
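One way to turn user density into a first sizing estimate is sketched below in Python. The per-server session capacity, the 20 percent headroom, and the function name are assumptions made up for illustration. In practice, the capacity of a given virtual machine size would have to come from testing or from an existing on-premises baseline.

```python
def servers_needed(concurrent_sessions: int, sessions_per_server: int,
                   headroom_percent: int = 20) -> int:
    """Estimate how many servers are needed for a given user density.

    concurrent_sessions : anticipated number of simultaneous user sessions
    sessions_per_server : assumed sessions one server can handle comfortably
    headroom_percent    : assumed extra capacity kept in reserve for spikes
    """
    if sessions_per_server <= 0:
        raise ValueError("sessions_per_server must be positive")
    if concurrent_sessions < 0 or headroom_percent < 0:
        raise ValueError("sessions and headroom must be non-negative")
    # Integer arithmetic keeps the rounding exact.
    target = concurrent_sessions * (100 + headroom_percent)
    capacity = sessions_per_server * 100
    # Ceiling division, with a minimum of one server so the service can run at all.
    return max(1, (target + capacity - 1) // capacity)


# Large online retailer: thousands of sessions at any one time.
print("retailer:      ", servers_needed(5000, sessions_per_server=500))

# Local supplier: only a few concurrent sessions.
print("local supplier:", servers_needed(5, sessions_per_server=500))
```

With these assumed figures the retailer needs twelve servers and the local supplier needs one, even though both are running the same type of ordering service.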

So again, the user density clearly has an impact on what kind of configuration you will need in terms of hardware and I’m sure that’s no surprise. But of course, when using cloud services, you’re dealing with virtualization, not physical hardware. But from the perspective of the service that you want to provide, it is still the hardware configuration that matters.

So, when you’re setting up a new virtual machine, of course you have the option to choose what kind of resources that system will have in terms of processing, memory and storage.

And depending on the provider, there may also be pre-configured systems that are designed for a specific type of workload. For example, there would likely be options for different types of web servers or database servers with varying operating systems and software platforms already implemented, and with a general hardware configuration in place that can likely still be customized to fine-tune your requirements, such as by simply adding more memory or more processing while otherwise using that same preset configuration.

Now, that said, you also have to consider the level of control that is available to you as a customer. Ultimately, the actual hardware is managed by the cloud provider.

So, your options likely aren’t going to be unlimited in terms of any preset configurations. But most major providers do at least offer several different levels or several different configurations that you can choose from, but there’s no guarantee that they will be able to offer the specific configuration that you’re going to need.

So, you may have to compare the service offerings of several providers and, toward that end, it may even be necessary to maintain multiple subscriptions if one provider can’t service all of your needs. So again, it’s simply a matter of trying to determine as best as possible what your service requirements are going to be with respect to the user density and several other factors, then comparing that with what is being offered by the cloud service provider to see if they match up.

And if it’s perhaps a case of migrating an existing on-premises service to the cloud, then you might be able to use your existing infrastructure as a guide with respect to the level of service it was able to provide.

And though it’s possible that your choices may be somewhat constrained, recall that this is still an infrastructure as a service or IaaS approach, meaning that at least none of the underlying hardware is your responsibility.

No part of the actual hardware is ever exposed to the customer, nor do you ever need to manage it. So, even if the provider might not offer exactly what you need, there is at least a trade off in that you know that none of it is your responsibility in terms of maintenance and upkeep.

So, if something that you do implement doesn’t quite seem to work, you can just decommission everything and move on to a different option. You might have lost some time, but you didn’t have to make any investments at the physical infrastructure level, which is always a possibility when dealing with an on-premises solution.

When it does come time to implement any given virtual machine then, again, the user density is going to have a direct impact on the level of processing required and, of course, the amount of memory. There are a number of online calculators available as well, perhaps some right within the portal of the cloud provider when creating a new virtual machine, that can offer you some guidance with respect to these values.

Based on the anticipated user density, for example, one might tell you that you should allocate 1 gigabyte of RAM for every 100 concurrent sessions.
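The kind of guidance such a calculator gives can be sketched in a few lines. This is a minimal illustration only: the ratio of 1 gigabyte per 100 concurrent sessions comes from the example above, while the function name and the 25% headroom factor are assumptions added for illustration.

```python
import math


def estimate_ram_gb(concurrent_sessions, gb_per_100_sessions=1.0, headroom=0.25):
    """Estimate memory for a given user density, rounded up to whole gigabytes.

    headroom is an assumed safety margin (0.25 = 25% extra), not a provider rule.
    """
    base_gb = concurrent_sessions / 100 * gb_per_100_sessions
    return math.ceil(base_gb * (1 + headroom))


print(estimate_ram_gb(1500))                # 15 GB base plus 25% headroom
print(estimate_ram_gb(1500, headroom=0.0))  # base figure only
```

The ratio itself would need to be replaced with a value measured for the actual service.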

Now, that’s just an example, of course, so that could vary greatly depending on what the service is. And there might also be features that you can take advantage of, such as hyperthreading, which can ease the effect of density overall by letting each processor core execute multiple threads simultaneously.

But it’s not out of the ordinary to encounter a certain degree of inconsistency when assessing performance based on values such as user density, because all sessions are not going to be equal, they won’t all have the same properties.

So, in general, you need to take these varying conditions into account and generally err on the side of caution. Ultimately it can still involve a certain level of using a best guess approach, at least in the early stages, but having a good idea of user density really is one of the best indicators as to the level of performance any given service will need to provide.

System Load as a Factor in Capacity Planning

In this section, we’ll examine the effect System Load has on planning for capacity, which will involve one of two approaches depending on whether you’re migrating an existing service to the cloud or building a new one from scratch.

Now, the same considerations need to be taken into account in either case. But of course if you’ve already been running the service internally, then you can assess its characteristics from that instance. But if it’s a new implementation, then clearly some estimates will be needed.

But those characteristics should include the overall performance, what kind of resources are necessary to support the desired level of performance, the demand on the service, the usage patterns, and a prediction of what might be needed in the future as the service grows and develops.

So, in determining those values, capacity planners should try to identify the most critical components to support the service with particular respect to any restricted levels of performance, for example due to existing physical constraints. Perhaps you might have identified that, for a desired level of performance for an application, a server needs at least 8 gigabytes of available memory, but the current server might not have that much available.

So, you’ve identified a component where additional capacity will be required. Then of course assess what kind of impact that will have on the service if and/or when that change is made, as some changes will have more of an impact than others.

While determining those values though, bear in mind that the capacity planner needs to focus on the capacity required to meet the demands of the workload for the immediate term and likely for the anticipated future, but this is still planning.

In other words, the focus of the planner should not be on optimization of services. That deals with simply improving the rate at which a workload is performed. The capacity planner just needs to ensure that the workload itself can be supported. Fine tuning its performance is a task for administrators further down the road. Toward that end, the efforts of the capacity planner should focus on how unique the service is.

In other words, is it for a very specific process that is only going to be used by maybe one department or division or is it something that will be used by the entire organization. This directly translates into determining the anticipated load on the system, and possibly identifying situations that might result in an overload on the system. So, as mentioned, if it’s a new system, a little bit of estimating and/or hypothesizing various scenarios might help with determining your needs.

But once all of that has been taken into consideration, then it’s time to deploy. Once the service has been deployed, then you might imagine that the planner has nothing left to do. But recall that part of planning is determining future requirements as well. So, as soon as deployment is complete, your attention should turn to determining a baseline level of performance, which involves noting the initial conditions of deployment such as the number of virtual machines that might be required or the number of users accessing the service.

Then it involves monitoring and recording what is considered to be the normal levels of activity through varying conditions such as the time of day, for instance, when the service is being used heavily versus when it’s being used very lightly. Then of course note any changes over time.

By comparing the overall level of performance, maybe 6 or 12 months post deployment, to its initial state, you can more easily determine the requirements to support the service moving forward. That said, determining exactly what those changes are so that you can compare to the baseline may require some specific load testing as well.
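Comparing a later measurement against the baseline can be as simple as a percentage change per metric. The sketch below is one possible way to do that; the metric names and all of the values are hypothetical and stand in for whatever was recorded at deployment and again some months later.

```python
def percent_change(baseline, current):
    """Return the percentage change for each metric present in both snapshots."""
    return {
        name: round((current[name] - value) / value * 100, 1)
        for name, value in baseline.items()
        if name in current and value != 0
    }


# Hypothetical snapshots: at deployment and 12 months afterwards.
baseline = {"virtual_machines": 4, "concurrent_users": 200, "avg_cpu_percent": 40.0}
after_12_months = {"virtual_machines": 6, "concurrent_users": 350, "avg_cpu_percent": 55.0}

changes = percent_change(baseline, after_12_months)
for metric, change in changes.items():
    print(f"{metric}: {change:+.1f}%")
```

Metrics that grew the most relative to the baseline are natural candidates for closer load testing.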

So, collecting specific metrics from the current implementation can help to provide the planner with sufficient information to indicate exactly where increases to the load have occurred, which in turn indicates where more resources need to be allocated to support those changes.

So, load testing may need to examine specific conditions or values such as: What is the current maximum load capability of the service? Where might any bottlenecks be occurring? Exactly what changes need to be made to the configuration? And could the implementation of a cooperative system alleviate some of the load from the current servers?

Now again, much of this might sound a lot like it has to do with performance tuning. And in many cases, the same procedures will be used when performance tuning is being done. But from the perspective of the capacity planner, it’s being done to simply ensure that there are enough resources available to support the demands of the service.

From that point it’s up to those who implement and administer the system to allocate and configure those resources in the most effective manner possible. You might imagine this process as not unlike a budget, whereby an annual budgeted amount is allocated to a particular department by the board of directors.

The board of directors would just sign off on that amount. It is then left to the department heads to decide how best to spend that amount.

Trend Analysis as a Factor in Capacity Planning

In this section, we’ll take a look at how you can use trend analysis to approach capacity planning, which primarily involves the assessment of your current capacity, and identifying how existing services have been utilized over time, to determine what kind of changes will be needed as your services evolve and grow.

Now, generally the focus of your analysis will be on the utilization of measurable metrics including processing, storage requirements, which software applications are being used most often and network bandwidth considerations. Because these are the same metrics that will be required to support new services, or perhaps just configuration changes to your existing services to meet new demands.

But knowing those existing metrics is necessary because trend analysis is the process of examining what has been happening in order to predict as accurately as possible what will happen. So, by assessing the trends of those metrics, it can help you to determine what will be necessary in the future.
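One simple way to turn "what has been happening" into "what will happen" is to fit a straight line through past measurements and extend it. The sketch below uses an ordinary least-squares fit written out by hand; the monthly storage figures are hypothetical, and a straight line is only an assumption, since real usage may be seasonal or may level off.

```python
def linear_forecast(history, periods_ahead):
    """Fit a least-squares line to evenly spaced samples and extrapolate it."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(history) / n
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, history))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    intercept = mean_y - slope * mean_x
    # The last sample sits at x = n - 1, so the forecast point is further right.
    return intercept + slope * (n - 1 + periods_ahead)


# Hypothetical storage use in GB for the last six months.
storage_gb = [100, 110, 120, 130, 140, 150]
forecast = linear_forecast(storage_gb, periods_ahead=6)
print(f"Projected storage in six months: {forecast:.0f} GB")
```

The slope can just as easily come out negative, in which case the projection points to shrinking demand rather than growth.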

And it’s worth noting at this point that there is often a default assumption made that the trends will indicate that more resources will be needed to accommodate growth. But of course, things can go in the exact opposite direction as well, which is not necessarily a bad thing.

Perhaps the trends have indicated that a particular service for which you’re still paying is becoming obsolete. So, by realizing this, perhaps that service can be decommissioned, which of course can help to improve your bottom line.

But while specific metrics are required, the capacity planner also has to apply that to determining requirements over a much broader scope if you will, such as significant changes to infrastructure and the necessary components that might be required to support a new or changing service.

And of course, knowing the overall performance requirements from your trend analysis, and knowing what changes are required from your predictions, will help you to determine exactly what kind of infrastructure changes might be necessary. For example, if you have been running a service internally, your analysis using those specific metrics might have indicated that you simply don’t have the infrastructure in place to accommodate the anticipated growth of the service.

Therefore, your capacity plan might indicate some significant changes to your infrastructure up to and including a complete migration of that service to the cloud, which of course can represent a significant change to the structure of your organization. So, in some cases, knowing some simple metric values can result in significant changes.

And of course, don’t forget that trend analysis should also include the cost and if you’re dealing with an existing cloud service that is being considered for upgrades, or perhaps a new service is being implemented to replace an older one, then this process will certainly be a little bit easier because you can just go back into the billing history to determine usage patterns.

But this approach isn’t really applicable to a service that was previously being run internally, but you can still get an idea as to the capacity requirements based on internal usage statistics, so that you can at least estimate what the cost might be if the service were to be migrated to the cloud.

But in either case, the more information you can gather about its previous use, the more informed you’ll be with respect to making the best decisions going forward. Sometimes, it might involve a lot of estimates and guesswork but in many cases, knowing what has been happening is a good indicator of what will happen. So, trend analysis is a very useful tool for the capacity planner.

Performance Capacity Planning

In this section, we’ll examine our last aspect of capacity planning, which focuses specifically on performance. Now, not only is this generally one of the biggest concerns of those who consume a service, but if it’s something that you’re providing for your customers, then in many cases you may need to be specifically aware of the level of performance because it may be under a service level agreement or SLA.

In that event, any drop in service below what is specified in the SLA could result in refunds to your customer, or of course, the possibility of losing that customer altogether, if the performance issues are too severe for too long.

So, with respect to planning adequate capacity to meet the demands of those who will be consuming the service, you also have to anticipate what the capacity requirements are going to be when it’s released, while maintaining a cost effective implementation for your own organization.

Clearly, it’s easy to maintain capacity and performance if you can just continually throw more money and more resources at a service, but that’s usually not the case. Plus you also have to try to determine what the needs will be in the future as the service changes and evolves.

So then, performance capacity planning typically involves asking what is the expected usage? How is the workload going to be distributed? What is the predicted rate of growth? And where is the tipping point between meeting the current demands and needing to implement changes to meet increasing demands.
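The tipping point question can be made concrete once a growth rate is assumed. The following sketch estimates how many months remain before a steadily growing load reaches the maximum the current implementation can handle. The compound monthly growth model and all of the figures are assumptions for illustration.

```python
import math


def months_until_capacity(current_load, max_capacity, monthly_growth_rate):
    """Months until compound growth pushes the load to max_capacity.

    Returns 0 if capacity is already reached, or None if the load is not growing.
    """
    if current_load >= max_capacity:
        return 0
    if monthly_growth_rate <= 0:
        return None
    months = math.log(max_capacity / current_load) / math.log(1 + monthly_growth_rate)
    return math.ceil(months)


# Hypothetical: 600 concurrent sessions today, 1,000 supported, 5% growth per month.
print(months_until_capacity(600, 1000, 0.05))
```

A result measured in only a few months suggests the changes should be planned now rather than after the limit is hit.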

Now, depending on the situation, those questions might not be all that easily answered. For example, if this is a new service that is being implemented for the first time, then clearly all of these have to be estimates.

But if it’s a case of an older service that is being upgraded, or perhaps an on-premises service that is being migrated to the cloud, then you’ll have historical data that can be used to assist with determining your capacity requirements, or at least you can begin gathering that information from your existing service, if you haven’t already.

Some of the particular values that you might be interested in, if you do have an existing service to use as a baseline, might include what the current response time is and what the overall level of utilization is for the resources that support it. You might also want to know what the maximum load of that implementation is, so that you can gauge how much more robust the newer implementation will need to be.

Other values include whether the existing implementation was satisfactory in meeting the requirements of a service level agreement, or how often it fell below that acceptable level, and what kind of inhibitors might be in place that would prevent the current instance from being able to support the anticipated levels of the performance requirements for the newer instance.

So then, on the assumption that the current capacity will not be adequate to meet the anticipated demands for a new implementation, there really are only two ways to respond. If you’re particularly constrained, such as by budgetary concerns, then reconfiguring or tweaking the architecture might allow for some enhancements to the performance capacity, but that approach often yields minimal results unless significant flaws in the architecture are discovered and corrected. So, more often the solution will be to add more resources.

Now, clearly this will cost more and will probably take more time, but recall that in a cloud service, upfront infrastructure costs are not required. The resources are there. That’s not to suggest, however, that it will always be a simple matter of allocating more memory to a virtual machine or just adding more virtual machines.

The resource requirements could be significant, which could in turn require significant investments of time and effort. But like any commitment to your service offerings, you’re making an investment and at least the cloud resources themselves do not require any kind of immediate purchases.

But again, when it comes to adding more resources, the capacity planner has to be able to identify where those resources need to be allocated and, of course, the level of resources required. As mentioned, in some cases, particularly for a new implementation, that might just require a judgment call or a best guess approach.

But recall that in a cloud environment if those guesses are off, it’s far less of an issue to allocate more resources or deallocate if you overestimated. Once again, no upfront purchases required. And again, if you have an existing implementation, you can take measurements of that instance to use as a guide, or even if you don’t, you might be able to break it down into separate components to gauge the performance of specific processes.

For example, if it’s a web-based ordering system, you could configure a database server to process similar types of record input operations and queries to get a sense of the database requirements. And if you have the means you could also build mock ups in a lab environment to model the behavior of the eventual service and try to extrapolate performance metrics from your model to the real thing.

Now, all of that can clearly take some time and effort, so, the process of planning for the performance capacity itself should have some structure applied to it as well. Beginning with identifying the requirements as laid out by the service level agreement, then trying to define what each specific unit of work does to provide the level of service overall.

Then for each unit, try to establish the actual levels of service that it can provide and assess its maximum capacity and/or its own tipping point. Then, assemble all of that information into a plan of implementation, that also accommodates change and growth as the implementation progresses.
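Once each unit of work has a measured maximum, the unit with the lowest ceiling sets the ceiling for the whole service. The sketch below assumes every request passes through every unit in sequence, which will not hold for all architectures; the unit names, capacities, and required throughput are all hypothetical.

```python
def find_bottleneck(unit_capacity_rps):
    """Return the (name, capacity) of the unit with the lowest maximum throughput."""
    name = min(unit_capacity_rps, key=unit_capacity_rps.get)
    return name, unit_capacity_rps[name]


# Hypothetical maximum requests per second measured for each unit of work.
units = {"web_frontend": 1200, "order_service": 800, "database": 450}
required_rps = 400  # hypothetical throughput implied by the SLA

bottleneck, capacity = find_bottleneck(units)
headroom_percent = (capacity - required_rps) / capacity * 100

print(f"Bottleneck: {bottleneck} at {capacity} requests/second")
print(f"Headroom over the requirement: {headroom_percent:.0f}%")
```

A small headroom figure indicates that this unit is the first place where growth will force a change.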

That said, always be mindful that almost every plan, and almost every execution of a plan, will come up against some roadblocks that will hinder the implementation. These can include the use of non-standard technologies, or workloads that may have to be implemented as workarounds.

There can also be unreliable forecasts that prevent a certain level of performance from being attained within budgetary or time constraints, or that call for an implementation that far exceeds the requirements, so it ends up costing too much or taking too much time to implement.

There could be components that were only available from specific vendors that end up causing issues with compatibility or integration, or perhaps the modelling that you implemented in the previous stages just isn’t translating to production due to scaling issues.

Lastly, some common mistakes that can hopefully be avoided include using incorrect or inappropriate workloads as part of your analysis stages, in that they simply don’t end up producing accurate values to indicate the performance capacity requirements of the new service.

Another is neglecting to consider startup and shutdown times when determining an appropriate service level agreement, because complex services with a lot of moving parts can take quite some time to fully restart.

And with respect to uptime and availability, many service level agreements will specify values as high as 99.99%, which only allows for about 52 minutes of downtime per year. So, if a single reboot of a complex system takes 10 minutes, then you can only reboot it five times per year, leaving you virtually no time for maintenance.
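The downtime budget behind an availability figure is easy to work out directly. The sketch below converts an availability percentage into minutes of permitted downtime per year, assuming a 365-day year. A budget of roughly 52 minutes corresponds to 99.99% availability, whereas 99.9% allows about 525.6 minutes (a little under nine hours).

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, assuming a 365-day year


def downtime_budget_minutes(availability_percent):
    """Minutes of downtime per year permitted by an availability target."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


budget = downtime_budget_minutes(99.99)
reboot_minutes = 10
reboots = int(budget // reboot_minutes)

print(f"99.99% allows about {budget:.2f} minutes of downtime per year")
print(f"That covers {reboots} reboots of {reboot_minutes} minutes each")
print(f"99.9% allows about {downtime_budget_minutes(99.9):.1f} minutes per year")
```

Running the numbers for a proposed SLA before committing to it shows how much room is left for planned maintenance.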

And finally, you might encounter a situation of too much data with just not enough analysis resulting in an incomplete picture of what you were dealing with in the first place, or just a lot of noise for lack of a better word, that is ultimately of no value.

So, planning performance capacity requirements can be a demanding task. But one final approach to keep in mind, particularly when using cloud services, is simply it’s better to be safe than sorry.

It’s typically easier to over-allocate in the cloud, then just deallocate as necessary, again because there are no large upfront costs associated with adding more resources. So, this approach at least helps you avoid falling short of the level of performance required by your service level agreements.

Creating Databases in the Cloud

Now, in this section, we’ll take a look at creating a database in the cloud. And this is only one example of course, but the reason why I’m choosing a database is because it is something that is quite scalable. So, in terms of capacity and planning, and adjusting for changes, it’s just a good example of some of the things that you can do when you are using cloud services.

So, I have already created one database. In fact, it’s right here, this Test_DB. And I’m going to use that in the next demonstration when we talk about reconfiguring for scalability purposes. But for the time being, let’s go ahead and create a new one.

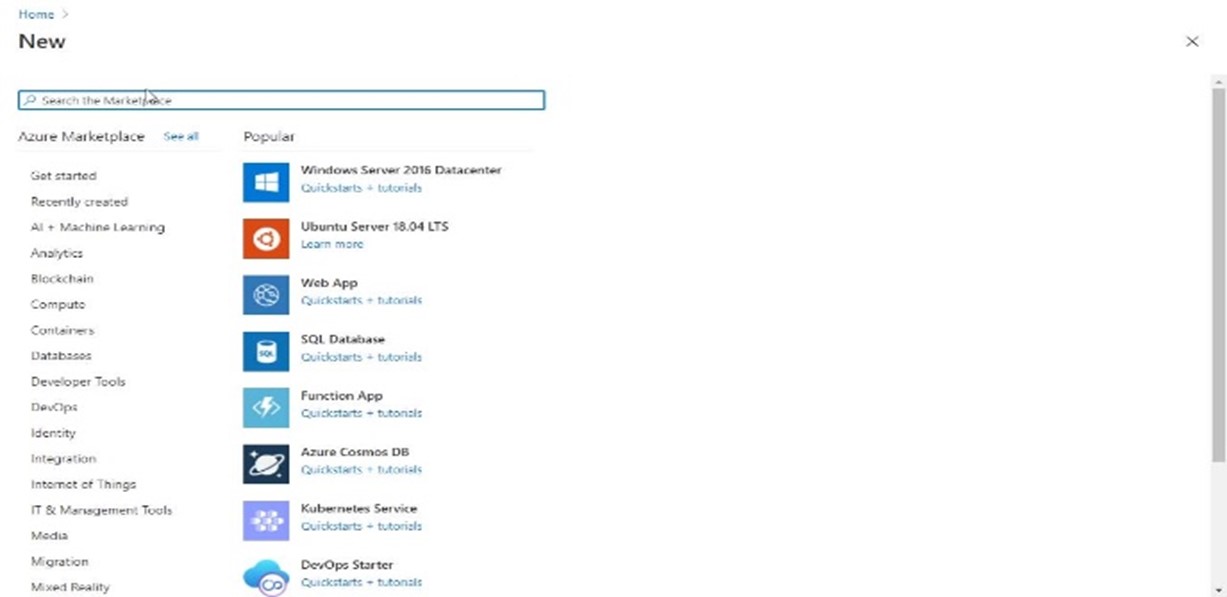

And again, I do want to point out that in this particular example, we are using the Microsoft Azure service. But again, the general idea is pretty much the same across all cloud services. So, from my homepage, we can click Create a resource. And I can choose the category of Databases.

Now, they are a little more specific when it comes to databases, in that there is actually a term known as Database as a Service.

But the idea here is that not only am I not really going to see any kind of infrastructure, it’s not really a platform either. So, it’s not infrastructure as a service, and it’s not really platform as a service because it really is just for the database. So, I am literally creating only a database in the cloud.

I can use my local tools to connect to this database and work with it just like any other, but I do not need to manage the server. It’s not being used in conjunction with any other services, such as a website, at least not for the time being. So, it’s not really a platform for an application either.

Now, of course, it could be. It’s just, at this point, it’s just a database. So, I’m just using the software, if you will, of the database server to create a database for whatever purpose I need. So again, that’s just an example of what you can do with cloud services.

If I was in an on premises environment, I would have to provision a physical server, or maybe a virtual machine, but every virtual machine runs on some physical server somewhere. I would need to install the database software.

And I would need to administer everything with respect to the server, and the software, and the upkeep, and the maintenance, and everything, for the purpose of simply just creating a database. In a cloud environment, I literally skip all of that and go straight to the database.

So anyway, that’s just a little bit about using cloud services. So, in terms of creating the database, I already have a Resource group created that I can use and I’m really just going to go with very basic default settings pretty much across the board here, and some values are already available to me because I already created a database earlier.

And you do still have to create a database server to host the database, but it’s nothing that you ever see for lack of a better word. So, I don’t have to worry about creating a virtual machine running a specific version of an operating system that I then have to remote into and install database software.

I just have to create a server, and this is what I created for the previous database. I simply called it dlndbserv, and I assigned a region to it, that’s it. There were some basic specifications that you could define for the server.

But every database needs to reside on a server somewhere, so, I really just had to supply very basic values, and that’s it. This server is now available to be used for additional databases that I create from this point on.

Okay, now these other properties about elastic pool, and Compute + storage, I’m going to talk about more so in the next demonstration. But I do want to quickly point out the Compute + storage values here, and what’s known as a DTU. This is essentially the value in Microsoft Azure that determines the overall level of performance and capacity for the database. So, if I click on configure database here, you get a basic idea as to some settings in terms of the performance. So, there’s Basic, Standard, and Premium.

Standard is for workloads with typical performance requirements. Premium is for IO-intensive workloads. Now, Premium is not available to me because I’m using a free trial, okay? But that just gives you some starting points. But the DTU is what’s known as a Database Transaction Unit, and it’s really just an index value that indicates the level of capability of this database, so I can simply drag the slider and place it wherever I see fit.

Now, again I’m using a free trial, so some of these higher values are not available to me, but in a full subscription you can absolutely set this to whatever you like. There is a link here that tells you a little bit more.

But overall, it is increasing the amount of memory, the amount of processing available, and the level of disk access that I will have. So again, you just set any value that you feel is appropriate, and it gives you the value itself over here and an indication as to the setting.

Again, if you click on the What is a DTU? link, it will certainly give you more information. But clearly the higher up you go, the better performing this database will be and the more capacity it will have. And you might also notice over on the right-hand side, it’s giving a general idea as to the cost as I increase the DTUs.

Okay, it’s also adjusting the maximum size of the database, but these can be adjusted independently of each other to a degree. Okay again, it depends on the subscription. It depends on the settings that you want, but all of this is simply meant to give you an idea about scaling. I can adjust the performance capabilities of this database.

Now, I’m just going to cancel this and leave it in its default state, okay? And we’ll just go ahead and review and create. All of the other settings are fine as they are, so, I’m just going to go ahead and create this.

The key point is that you can adjust those settings depending on what you feel your capacity requirements are going to be. Now, in the next demonstration, we’ll talk about some of those other values that can be adjusted after the fact to accommodate for changes in the environment, but again, a database is a very good example of something that can be scaled based on what your demands are.

Scaling Resources

Okay, now, in this demonstration, we will take a look at how you can adjust the scaling demands for any given resource. And again, in this case, I’m using databases as an example. So, this is something that would be applicable to other types of resources, but it just gives you an indication of how you can adjust for changes when you are dealing with cloud-based resources.

So, earlier I created two databases, and within each database, you can still specify the performance characteristics based on what you anticipate the demand will be. But you don’t always know what the demand will be.

So, you might have to take a best guess and set a particular configuration and then see what happens. And that’s fine. Maybe you do that in some cases, and you make adjustments as you go. But there are options for certain types of resources that allow you to make those adjustments automatically on the fly, if you will.

And in the case of databases, at least in Microsoft’s Azure service, these are known as elastic pools. They allow you to pool the resources of your databases so that if one is being overutilized, it can quite literally borrow resources from one that is being underutilized and vice versa. So, in other words, they can share the resources of the pool and adjust their performance characteristics dynamically.

And it is already on the only server that I have configured. Okay, that’s fine. You might have more than one server, in which case you could choose a different one. But with respect to the compute and storage, now it tells me that it is based on an eDTU value. We did discuss the DTU in the previous demonstration, which is a Database Transaction Unit. And an eDTU is an elastic Database Transaction Unit.

Again, I’m using a free trial, so I’m a little bit limited here but in a normal situation, you can just set whatever value you like. And then you can specify the maximum size. But this now is for the pool. So, all of the databases in the pool will be able to draw from these settings. And once again, you have these preset configurations of Basic, Standard, and Premium.

Again, premium is not available simply because I’m in a free trial. But all I have to do now is go to the Databases page, click on Add databases and there are my two databases.

Add those in, and that’s it. Now I can still set limits on a per-database basis.

So in other words, I can still specify that any one database will not have access to any more than a certain value if I want to. But that’s up to you. You don’t have to do that.

And as mentioned, if one of them starts to become overutilized, it can quite literally borrow resources from the other, assuming that they are available. But the whole idea is to ensure that you do have enough resources in the pool.

So, when you have a lot of databases and you aren’t really certain as to what the demands will be, for any given database, rather than having to try to figure out what each setting should be, you can just create the pool and allow the databases to draw from that pool. So as soon as I apply that, I can simply create that now and allow the databases to adjust dynamically with respect to the performance characteristics.
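The benefit of pooling can be shown with a small calculation. If each database is sized on its own, you pay for the sum of every individual peak; in a pool, you only need to cover the highest combined demand at any one moment. The sketch below is a simplified model, not how Azure itself sizes a pool, and the database names and demand values (in abstract performance units) are hypothetical.

```python
def sizing_comparison(demand_by_db):
    """Compare separate sizing (sum of peaks) with pooled sizing (peak of sums).

    demand_by_db maps a database name to demand samples taken at the same times.
    """
    separate = sum(max(series) for series in demand_by_db.values())
    pooled = max(sum(step) for step in zip(*demand_by_db.values()))
    return separate, pooled


# Hypothetical demand for two databases, sampled at four points in the day.
demand = {
    "db_a": [10, 40, 15, 5],
    "db_b": [35, 10, 20, 30],
}

separate, pooled = sizing_comparison(demand)
print(f"Sized separately: {separate} units")
print(f"Sized as a pool:  {pooled} units")
```

The saving depends on the databases peaking at different times; if they all peak together, the two figures converge.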

So again, this is very useful with respect to capacity. You can try to plan everything out as best as possible, but ultimately, you really don’t know until your databases or your other resources are in production. So, it can take a little time to figure out what the settings might be. But doing something like this pool based approach allows you to not have to worry about that so much.

And again, I do want to stress that this is only one example using databases but there are other resources that can be configured in similar types of pools so that if any particular resource is being overtaxed it has the ability to draw resources from somewhere else.

And that’s the idea of trying to find the best balance in terms of what your capacity is going to be with respect to the resources they will be able to access.

Course Summary

So, in this course, we’ve examined factors that contribute to capacity planning in cloud environments. We did this by exploring factors contributing to both technical and business capacity planning, standard templates, licensing, user density, system load, trend analysis, and performance capacity planning, as well as creating a cloud-based database and how resources in the cloud can be scaled.